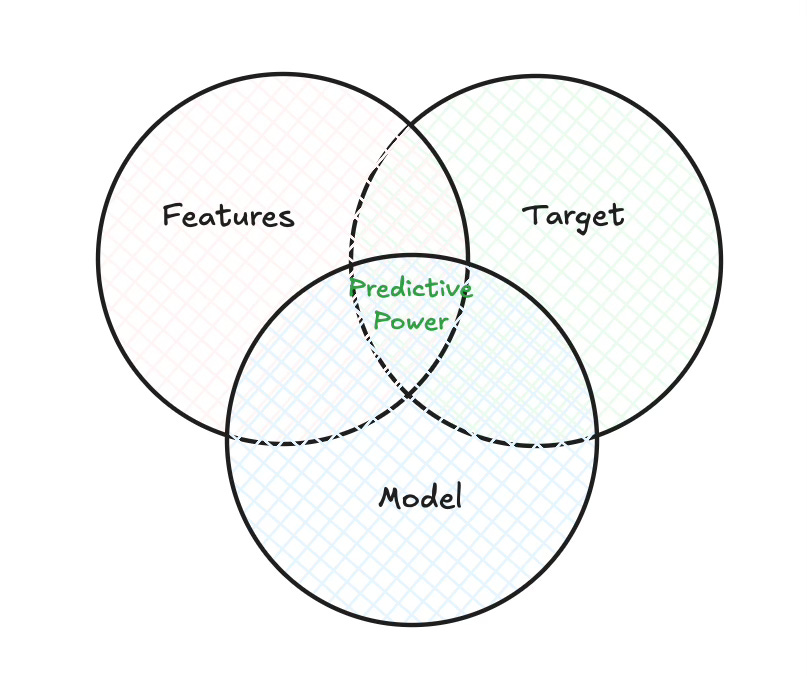

The 3 Pillars of Predictive Power

Features. Target. Model. Miss one, and the whole thing falls apart.

When I started building trading models, I spent most of my time tweaking features or testing different model architectures. I thought the answer was in the indicators or the algorithms.

But over time, I realized something else.

Even with clean features and a solid model, my results were still inconsistent. Some strategies looked great in backtest and fell apart in live trading. Others gave signals that didn’t make any sense. The issue was the target.

It took me a while to understand this, but I believe it now.

If you want to build real predictive power in trading, you need three things to work together. Not just one. Not just two. All three.

👉 Good features. A meaningful target. And a model that fits the problem.

If one is off, you’re just optimizing noise.

If all three align, that’s when the strategy starts to work.

Let’s go through them.

1. Good Features: Feeding the Model With Useful Information

When your model isn’t performing well, it’s tempting to blame the architecture. But more often than not, the problem comes from what you’re feeding it.

Good features aren’t about throwing dozens of indicators into a dataframe and hoping something sticks. They’re about capturing real structure in the market: momentum, volatility, trend stability, order flow pressure. These are the kinds of signals that matter.

But building good features takes time. That’s why I created Quantreo, a Python library with dozens of ready-to-use features specifically designed for trading. It includes statistical, technical, and custom indicators that I’ve tested in real-world strategies.

You can use it as a starting point, then adapt it to your own ideas.

In short, if your features aren’t aligned with your target or your market regime, no model can save you. But if they are, you give your model a real shot at learning something meaningful.

There’s no universal feature set. What works for a volatility model won’t necessarily help in a directional setup.

My tip: don’t reuse the same feature set for every problem. Choose your features based on the target you’re trying to predict and the type of model you’re using.

2. A Good Target: The Signal You Actually Want to Capture

Most people jump straight into modeling without asking the most important question: what exactly am I trying to predict?

That question sounds simple, but it’s the foundation of everything. A badly defined target means your model will chase the wrong signal, no matter how clean your features are.

A good target is:

Aligned with your strategy goal (e.g. direction, magnitude, volatility)

Statistically stable (not just rare outliers or noisy randomness)

For example, if you’re building a breakout strategy, your target could be a directional move that only counts after a volatility contraction. If you’re working on risk forecasting, you might want to predict future volatility instead of direction.
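To make the difference between a directional target and a volatility target concrete, here is a minimal sketch using only the Python standard library. The function names, the toy prices, and the horizons are assumptions chosen for illustration; they are not taken from Quantreo or any other library.

```python
import math
import statistics

def log_returns(prices):
    """One-step log returns; the result has len(prices) - 1 entries."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]

def direction_target(prices, horizon):
    """1 if the price is higher `horizon` bars ahead, else 0 (None where the future is unknown)."""
    n = len(prices)
    return [
        int(prices[i + horizon] > prices[i]) if i + horizon < n else None
        for i in range(n)
    ]

def future_volatility_target(prices, horizon):
    """Standard deviation of the next `horizon` log returns (None where the future is unknown)."""
    rets = log_returns(prices)
    # rets[i] is the return from bar i to bar i + 1, so the forward window starts at i.
    return [
        statistics.pstdev(rets[i:i + horizon]) if i + horizon <= len(rets) else None
        for i in range(len(prices))
    ]

# Toy prices, purely illustrative (not market data).
prices = [100.0, 101.0, 99.5, 102.0, 103.5, 101.0, 104.0]
y_direction = direction_target(prices, horizon=2)
y_volatility = future_volatility_target(prices, horizon=3)
```

Both targets come from the same price series, but they ask different questions. The last bars get `None` because their future is not yet known, and those rows should be dropped before training.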

My tip: I never start by saying "I'll use this target". I start by identifying a problem or a hypothesis in the market, then I build a target around that.

Don't just label your data because everyone uses a certain metric. Build something that reflects the behavior you actually want your model to detect.

3. Choose a Model That Matches the Complexity

Sometimes I see people build extremely advanced models (deep neural nets, stacked ensembles) and then wonder why they don’t work. Most of the time, the issue isn’t the model. It’s the mismatch between the model’s complexity and the problem’s structure.

When I create a model, I don’t ask “What’s the best model out there?”

I ask: “What’s the structure of my inputs and targets?”

If I’m using flat features (aggregated statistics, volatility measures, moving averages), I’ll probably go with a tabular model like XGBoost or another ML classifier.

If I’m using sequences, like 30 steps of volatility or raw price moves, then a CNN or LSTM makes more sense.
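The difference between flat and sequential inputs is easiest to see in the shape of the data. The sketch below is a standard-library illustration; the helper names `flat_features` and `sequence_features` and the toy returns are hypothetical.

```python
import statistics

def flat_features(returns, lookback):
    """One row of aggregated features per bar: (mean, volatility) over `lookback` returns."""
    rows = []
    for end in range(lookback, len(returns) + 1):
        window = returns[end - lookback:end]
        rows.append((statistics.fmean(window), statistics.pstdev(window)))
    return rows

def sequence_features(returns, steps):
    """One sample per bar: the last `steps` raw returns, kept in time order."""
    return [returns[end - steps:end] for end in range(steps, len(returns) + 1)]

# Toy returns, purely illustrative.
returns = [0.01, -0.02, 0.005, 0.015, -0.01, 0.02, -0.005, 0.01]

# 5 rows x 2 columns: each row is a summary, so the order inside the window is gone.
X_flat = flat_features(returns, lookback=4)

# 5 samples x 4 time steps: the order is preserved for a sequence model to exploit.
X_seq = sequence_features(returns, steps=4)
```

A tabular model would consume `X_flat` row by row. A CNN or LSTM would typically expect `X_seq` reshaped to (samples, steps, channels), with one channel here.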

The same applies to the complexity of the signal. If your label is extremely noisy or regime-dependent, throwing more model layers at it won’t help. You need to simplify the problem first. Maybe condition it. Maybe change the target.
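One way to read "condition it" is to train only on samples from the regime where the hypothesis is supposed to hold. Here is a minimal sketch, assuming a volatility-contraction regime defined as short-term volatility below 70% of long-term volatility. The threshold, the function names, and the toy numbers are all illustrative assumptions.

```python
def volatility_contraction_flags(short_vol, long_vol, ratio=0.7):
    """True where short-term volatility is below `ratio` times long-term volatility."""
    return [s < ratio * l for s, l in zip(short_vol, long_vol)]

def condition_samples(features, labels, regime_flags):
    """Keep only the (features, label) pairs where the regime flag is True."""
    return [(x, y) for x, y, keep in zip(features, labels, regime_flags) if keep]

# Toy values, purely illustrative.
short_vol = [0.010, 0.006, 0.012, 0.005]
long_vol = [0.010, 0.010, 0.011, 0.009]
features = [[0.2], [0.1], [-0.3], [0.4]]
labels = [1, 0, 1, 1]

flags = volatility_contraction_flags(short_vol, long_vol)
conditioned = condition_samples(features, labels, flags)
```

The model then sees fewer samples, but they all belong to the same regime, which can make a noisy label easier to learn.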

Good modeling isn’t about being fancy. It’s about matching the model to the job.

Don’t let the toolbox decide your structure. Let the data and problem guide the tool you choose.

4. Three Examples to Spot the Alignment (or Lack of It)

Let’s wrap with a few examples to see what happens when features, target, and model aren’t aligned, and when they are.

Example 1 - Mismatch: Great Features, Wrong Target

Goal: Predict the trend of an asset

Features: Medium and long-term indicators like moving averages, volatility, volume → good

Target: Variation over the next 2 minutes → problem

Model: ML classifier → fine for aggregated features

Even if your features are solid, the target here is completely off. You’re trying to predict micro-movements using slow features, so the model ends up fitting noise.

🛑 You built the right inputs, but asked the wrong question.

Example 2 - Mismatch: Wrong Model for the Data

Goal: Predict the trend of an asset

Features: Medium and long-term aggregated indicators → good

Target: Variation over the next week → good

Model: RNN (LSTM/GRU) → not ideal

Why not? Your features are aggregated, like 200-period volatility. Feeding 30 consecutive values of such features to an RNN means feeding 30 highly similar numbers. There’s no temporal richness for the model to exploit. A tabular model would work better here.

If you wanted to use an RNN, you’d need intra-bar volatility or raw sequences with meaningful time structure.
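The "30 highly similar numbers" point can be checked on synthetic data. The sketch below compares the lag-1 autocorrelation of a 200-period rolling volatility with that of the raw returns. The returns are random Gaussian draws, not market data, and the helper names are hypothetical.

```python
import math
import random

def rolling_volatility(returns, window):
    """Standard deviation over each trailing `window` of returns."""
    out = []
    for end in range(window, len(returns) + 1):
        chunk = returns[end - window:end]
        mean = sum(chunk) / window
        out.append(math.sqrt(sum((r - mean) ** 2 for r in chunk) / window))
    return out

def lag1_autocorrelation(series):
    """Correlation between the series and itself shifted by one step."""
    mean = sum(series) / len(series)
    num = sum((a - mean) * (b - mean) for a, b in zip(series, series[1:]))
    den = sum((x - mean) ** 2 for x in series)
    return num / den

random.seed(0)
returns = [random.gauss(0.0, 0.01) for _ in range(2000)]  # synthetic returns
slow_vol = rolling_volatility(returns, window=200)

rho_vol = lag1_autocorrelation(slow_vol)  # consecutive windows share 199 of 200 returns
rho_ret = lag1_autocorrelation(returns)   # independent draws, so little serial structure
```

On this kind of data, `rho_vol` sits very close to 1, because each value barely differs from the previous one. `rho_ret` stays near 0. A sequence of 30 such volatility values therefore carries little more information than the last value alone.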

🛑 You picked a good question and features, but the wrong model for the job.

Example 3 - Everything Aligned

Goal: Predict the trend of an asset

Features: Medium and long-term indicators like moving averages, volume, volatility → good

Target: Next week’s return sign → good

Model: ML classifier (e.g., LightGBM, XGBoost) → good

All components are pointing in the same direction. The time horizon of the target matches the features, and the model is well-suited for the structure of the data.

✅ That’s when you get the best shot at finding a real, reproducible edge.

If you want to go deeper and build smarter features, understand signal reliability, and master techniques like triple-barrier labeling, model understanding, or feature conditioning, that’s exactly what we cover in ML4Trading.

🚀 Whether you're coding your first models or scaling a live strategy, ML4Trading gives you the tools, templates, and theory to build robust and intelligent trading systems.

Thanks for reading, now it’s your turn to build the brain of your strategy.
